Researchers have developed a new AI system that moves autonomous vehicles beyond simply seeing pedestrians to anticipating their next actions.

A new AI model is demonstrating an unprecedented ability to anticipate human actions by interpreting visual and contextual cues in real time. Rather than simply reacting to movement, the system reasons about what people are likely to do next.

Researchers from the Texas A&M University College of Engineering and the Korea Advanced Institute of Science and Technology have introduced a new artificial intelligence (AI) system called OmniPredict, designed to improve safety for self-driving cars.

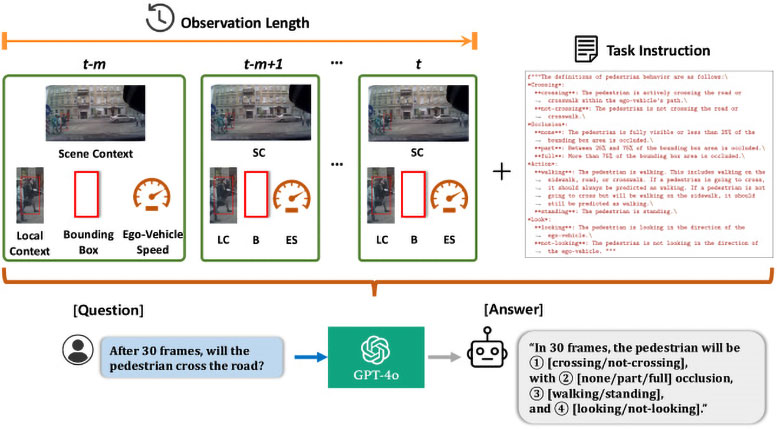

OmniPredict is the first system to use a Multimodal Large Language Model (MLLM) to forecast how pedestrians may behave. It draws on the same kind of underlying technology used in advanced chatbots and image recognition, but its goal is different. By pairing what it sees with contextual details, the system aims to predict, in real time, what a person is likely to do next.

Early tests indicate that OmniPredict can deliver notably high accuracy even without task-specific training, results that have already attracted attention.

“Cities are unpredictable. Pedestrians can be unpredictable,” said Dr. Srikanth Saripalli, the project’s lead researcher and director of the Center for Autonomous Vehicles and Sensor Systems. “Our new model is a glimpse into a future where machines don’t just see what’s happening, they anticipate what humans are likely to do, too.”

A new kind of ‘street smarts’

As developers push to make autonomous driving safer, OmniPredict adds a new layer of street awareness that moves closer to human-like intuition.

Instead of only responding to a pedestrian’s current movement, it attempts to anticipate what that person will do next. If it works as intended, this approach could change how autonomous vehicles operate in dense urban settings, helping them navigate busy streets more smoothly.

Dr. Srikanth Saripalli and the Texas A&M University research team’s new AI system for predicting pedestrian behavior. Credit: Texas A&M University College of Engineering

“It opens the doors for safer autonomous vehicle operation, fewer pedestrian-related incidents and a shift from reacting to proactively preventing danger,” Saripalli said.

The psychological landscape could shift too.

Imagine standing at a crosswalk and, instead of locking eyes with a human driver, knowing that an AI vehicle is tracking your position and planning around your likely next move.

“Fewer tense standoffs. Fewer near-misses. Streets might even flow more freely. All because vehicles understand not only motion, but most importantly, motives,” Saripalli said.

Beyond crosswalks: Reading human behavior in complex environments

OmniPredict’s implications extend far beyond bustling city streets, chaotic intersections, or crowded crosswalks.

“We are opening the door for exciting applications,” Saripalli said. “For instance, the possibility of a machine to capably detect, recognize, and predict outcomes of a person displaying threatening cues could have important implications.”

Broadly, an AI system that reads posture changes, hesitation, body orientation, or signs of stress could be a game-changer for personnel involved in military and emergency operations.

An overview of OmniPredict, a GPT-4o-powered system that blends scene images, close-up views, bounding boxes, and vehicle speed to understand what pedestrians might do next. By analyzing this rich mix of inputs, the model sorts behavior into four key categories (crossing, occlusion, actions, and gaze) to make smarter, safer predictions. Credit: Dr. Srikanth Saripalli, Texas A&M University College of Engineering. https://doi.org/10.1016/j.compeleceng.2025.110741

“It could help flag and alert early indicators of risk, or even provide an extra layer of situational awareness,” Saripalli said.

In these scenarios, the new approach might give personnel the ability to rapidly interpret complex environments and make faster, more informed decisions.

“Our goal in the project isn’t to replace humans, but to help augment them with a smarter partner,” said Saripalli.

Putting it to the test

Traditional self-driving systems rely on computer-vision models trained on large datasets containing thousands of images. While powerful, these models struggle to adapt to changing conditions.

“Weather changes, people behaving unexpectedly, rare events, and the chaotic elements of a city street all could possibly affect even the most sophisticated vision-based systems,” Saripalli said.

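OmniPredict takes a different approach. Rather than depending solely on a vision model trained for the task, it uses GPT-4o, a multimodal large language model, to reason jointly over scene images, close-up views of pedestrians, bounding boxes, and vehicle speed.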

The result is an AI that doesn’t just see a scene; it interprets it and anticipates how each element might move, adjusting in real time.

The team tested OmniPredict on two of the toughest benchmarks in pedestrian behavior research, the JAAD and WiDEVIEW datasets, without any prior task-specific training.

The findings, published in Computers and Electrical Engineering, reported that OmniPredict achieved 67% accuracy, outperforming the latest models by 10%.

It even maintained performance when the researchers added contextual information, like partially hidden pedestrians or people looking toward a vehicle.

The AI also showed faster response speeds, stronger generalization across different road contexts, and more robust decision-making than traditional systems — encouraging signs for future real-world deployment.

“OmniPredict’s performance is exciting, and its flexibility hints at much broader real-world potential,” Saripalli said.

Turning the corner of autonomy and anticipation

While still a research model and not a road-ready system, OmniPredict points to a future where autonomous vehicles rely less on brute-force visual learning and more on behavioral reasoning.

By combining reasoning with perception, the system points toward a new kind of shared intelligence, one in which the world isn’t just getting more automated but also more intuitive.

“OmniPredict doesn’t just see what we do, it understands why we do it and can now predict when we are likely to do an action,” Saripalli said.

If AI-powered cars can read our next move, the road ahead just got a whole lot smarter.

Reference: “Multimodal understanding with GPT-4o to enhance generalizable pedestrian behavior prediction” by Je-Seok Ham, Jia Huang, Peng Jiang, Jinyoung Moon, Yongjin Kwon, Srikanth Saripalli and Changick Kim, 18 October 2025, Computers and Electrical Engineering.

DOI: 10.1016/j.compeleceng.2025.110741
